Highlights:

- Vortex introduces a GPU-accelerated framework to handle large-scale data analytics beyond GPU memory limitations.

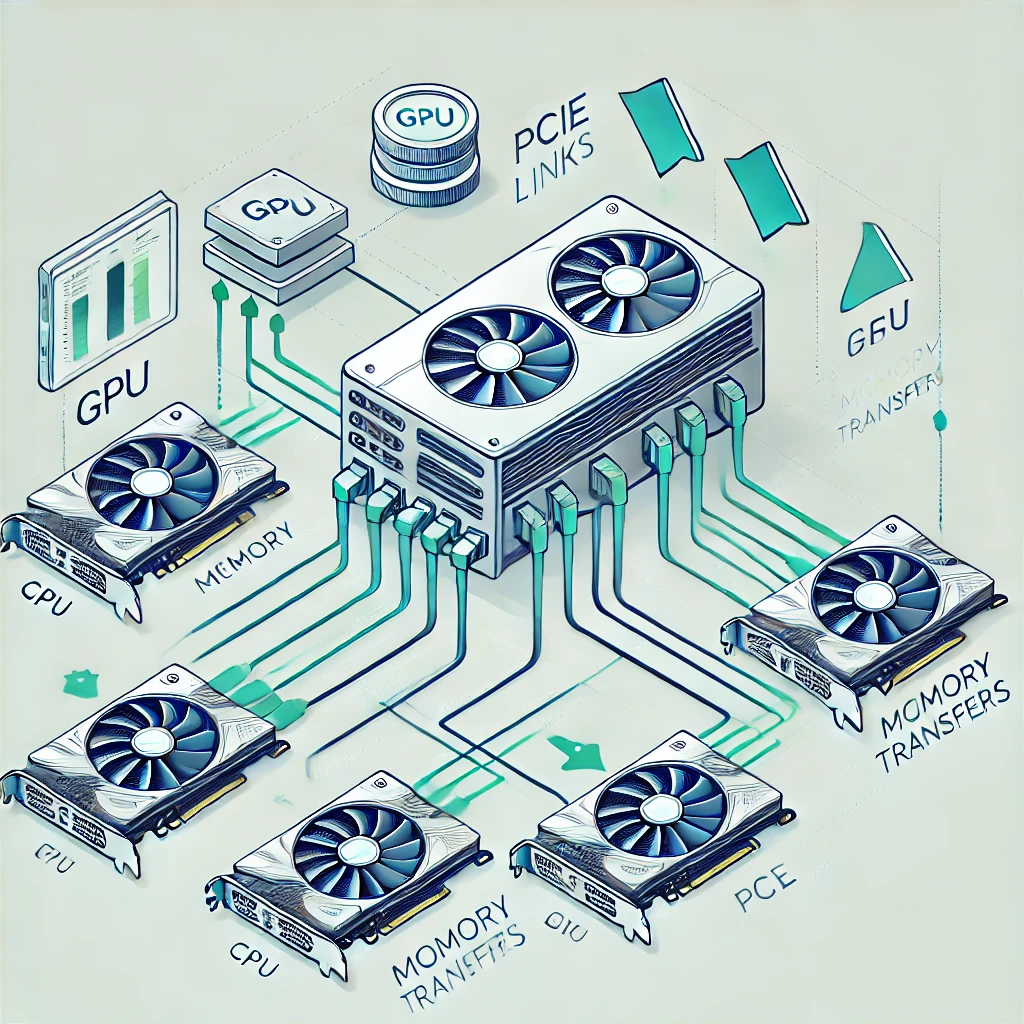

- It leverages unused PCIe bandwidth across multiple GPUs for efficient CPU-GPU data transfers.

- The new IO-decoupled programming model simplifies GPU development and enhances code reuse.

- On the Star Schema Benchmark, Vortex outperforms the GPU-based Proteus system by 5.7× and the CPU-based DuckDB engine by 3.4×.

TLDR:

Researchers from the University of Michigan have developed Vortex, a framework that harnesses untapped GPU IO resources to overcome memory constraints in large-scale data analytics. By spreading CPU-GPU data transfers across the PCIe links of multiple GPUs and simplifying GPU programming, Vortex runs 5.7× faster than the GPU-based Proteus system and 3.4× faster than the CPU-based DuckDB engine.

The GPU Memory Problem: A Roadblock for Big Data

Graphics Processing Units (GPUs) are essential for high-performance computing tasks like data analytics and artificial intelligence (AI), because their massively parallel architecture enables rapid computation. However, their on-device memory is limited, typically tens to low hundreds of gigabytes per GPU, which becomes a bottleneck when datasets reach terabytes.

Traditional workarounds, such as pooling the memory of multiple GPUs or streaming data from CPU memory, run into their own problems: either GPUs sit idle waiting for data, or transfers are throttled by the limited bandwidth of the PCIe link between CPU and GPU.

Vortex, a new GPU-accelerated framework developed by Yichao Yuan, Advait Iyer, Lin Ma, and Nishil Talati at the University of Michigan, takes a different approach. The research, set to be presented at the VLDB 2025 conference, redistributes IO resources across GPUs so that a single analytics GPU can draw on the PCIe bandwidth of the entire system while keeping overhead low.

How Vortex Works: Rethinking Data Transfer

The core innovation of Vortex is its ability to use all available PCIe bandwidth in a multi-GPU system. Normally each GPU exchanges data with the CPU only over its own PCIe link, so a GPU running analytics is limited to that single link's bandwidth. Vortex lets GPUs busy with compute-heavy tasks, such as AI inference, lend their underused PCIe links to the analytics GPU: data is copied from host memory into those GPUs and then forwarded to the analytics GPU.

Imagine a team of runners passing water bottles to a single marathoner: even though they are not racing themselves, their help keeps the main runner hydrated. Vortex gets similar help from GPUs whose PCIe links would otherwise sit idle.
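
To make the bandwidth argument concrete, here is a minimal Python sketch, not Vortex's implementation. It assumes a hypothetical server with four GPUs, each with a 25 GB/s PCIe link, and models a transfer as fixed-size chunks dealt round-robin across the links. The names `Link`, `plan_routes`, and `transfer_time_s` are illustrative, and the cost of forwarding chunks from helper GPUs to the analytics GPU is deliberately ignored.

```python
from dataclasses import dataclass


@dataclass
class Link:
    gpu: int              # GPU whose PCIe link carries this share of the data
    bandwidth_gbs: float  # assumed host-to-GPU bandwidth of that link, in GB/s


def plan_routes(num_chunks: int, links: list[Link]) -> dict[int, list[int]]:
    """Assign chunk IDs round-robin across every available PCIe link.

    Chunks sent to a GPU other than the analytics GPU would then be
    forwarded GPU-to-GPU; that forwarding cost is ignored in this model.
    """
    plan: dict[int, list[int]] = {link.gpu: [] for link in links}
    for chunk in range(num_chunks):
        plan[links[chunk % len(links)].gpu].append(chunk)
    return plan


def transfer_time_s(plan: dict[int, list[int]], links: list[Link], chunk_gb: float) -> float:
    """Links run in parallel, so the most heavily loaded link sets the total time."""
    bandwidth = {link.gpu: link.bandwidth_gbs for link in links}
    return max(len(chunks) * chunk_gb / bandwidth[gpu] for gpu, chunks in plan.items())


# Hypothetical system: analytics GPU 0 plus three GPUs busy with AI inference,
# each with an assumed 25 GB/s PCIe link; 64 chunks of 1 GB to move.
links = [Link(gpu=g, bandwidth_gbs=25.0) for g in range(4)]
one_link = transfer_time_s(plan_routes(64, links[:1]), links[:1], 1.0)
all_links = transfer_time_s(plan_routes(64, links), links, 1.0)
print(f"one link: {one_link:.2f} s, four links: {all_links:.2f} s")
# prints: one link: 2.56 s, four links: 0.64 s
```

In this idealized model the speedup scales with the number of links; in practice the GPU-to-GPU forwarding step and contention with the helper GPUs' own traffic would eat into that gain.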

The Three-Layer Architecture

- Optimized IO Primitive: uses the PCIe links of all GPUs in the system for efficient data transfer to the analytics GPU.

- IO-Decoupled Programming Model: separates GPU kernel design from IO scheduling, simplifying code and enabling kernel reuse.

- Tailored Query Operators: implements GPU-friendly database operations, such as sorting and joining, for better performance.
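
The article does not describe how Vortex's operators are built internally. As a generic illustration of how an operator like sort can handle data larger than device memory, here is a textbook external merge sort in Python: each chunk is sorted on its own (the step a GPU sort kernel would run on data streamed in from host memory), and the sorted runs are then merged. The function name and the chunk size are hypothetical.

```python
import heapq


def external_sort(values: list[int], chunk_size: int) -> list[int]:
    """Sort a dataset in chunks that each fit a (hypothetical) memory budget.

    Phase 1 sorts each chunk independently; this is the part a GPU sort
    kernel would handle, one streamed chunk at a time.
    Phase 2 performs a k-way merge of the sorted runs.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    runs = [sorted(values[i:i + chunk_size]) for i in range(0, len(values), chunk_size)]
    return list(heapq.merge(*runs))


data = [9, 3, 7, 1, 8, 2, 6, 4, 5, 0]
print(external_sort(data, chunk_size=3))  # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
```

The key property is that no single step needs the whole dataset in memory at once, which is what lets a sort scale past GPU memory capacity.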

Key Performance Insights: The Numbers Don’t Lie

The researchers benchmarked Vortex using the Star Schema Benchmark (SSB), a standard benchmark for analytical (data-warehouse) database workloads. The results were impressive:

- 5.7× speedup over Proteus, a state-of-the-art GPU-based analytics system.

- 3.4× faster than the CPU-based DuckDB engine.

- 2.5× better price-performance ratio compared with a CPU-based DuckDB deployment.

In one experiment, Vortex sorted 8 billion integers almost twice as fast as an equivalent single-GPU setup. The system's optimized IO primitive transferred data at 140 GB/s, about 27% faster than the 110 GB/s baseline.
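
For a sense of scale, the snippet below works out how long one pass over that input takes at each bandwidth. It assumes 32-bit integers (4 bytes each, so 32 GB in total), which the article does not state, and uses 1 GB = 10⁹ bytes. A real sort may read and write the data more than once, so these figures are a per-pass estimate, not a prediction of the sort's runtime.

```python
def transfer_seconds(num_items: int, bytes_per_item: int, bandwidth_gb_s: float) -> float:
    """Time for one pass over the data at a given bandwidth (1 GB = 1e9 bytes)."""
    return num_items * bytes_per_item / (bandwidth_gb_s * 1e9)


N = 8_000_000_000  # 8 billion integers
ITEM_BYTES = 4     # assumption: 32-bit integers, i.e. 32 GB in total

for bw in (110, 140):
    print(f"{bw} GB/s: {transfer_seconds(N, ITEM_BYTES, bw):.3f} s per pass")
print(f"bandwidth gain: {140 / 110 - 1:.0%}")
# 110 GB/s: 0.291 s per pass
# 140 GB/s: 0.229 s per pass
# bandwidth gain: 27%
```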

Simplifying GPU Development: A Developer-Friendly Model

Writing efficient GPU code is notoriously difficult, often requiring intricate synchronization of compute tasks and memory transfers. Vortex’s IO-decoupled programming model addresses this pain point.

By separating IO scheduling from compute kernel design, Vortex lets developers write GPU code without micromanaging data movement. This makes it easier to adapt existing high-performance kernel libraries, such as AMD's rocPRIM or NVIDIA's CUB, to big data workloads.

“We wanted to reduce the programming burden while maximizing performance,” explains lead author Yichao Yuan. “Vortex makes it possible to write simpler code that still gets top-tier performance.”
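
The idea of decoupling IO from compute can be sketched in a few lines of Python. This is a conceptual model under stated assumptions, not Vortex's API: `fetch_chunk` is a hypothetical stand-in for a host-to-GPU copy, `run_decoupled` plays the role of the IO scheduler with simple double buffering, and the "kernels" are ordinary functions that only ever see one chunk. Python threads illustrate the structure rather than real GPU copy/compute overlap.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def fetch_chunk(source: List[T], start: int, size: int) -> List[T]:
    """Hypothetical stand-in for a host-to-GPU copy; here it is just a slice."""
    return source[start:start + size]


def run_decoupled(source: List[T], chunk_size: int, kernel: Callable[[List[T]], R]) -> List[R]:
    """IO scheduler: owns all data movement; the kernel only ever sees a chunk.

    The copy of chunk i+1 is submitted before the kernel runs on chunk i,
    so transfer and compute can overlap (double buffering).
    """
    starts = list(range(0, len(source), chunk_size))
    results: List[R] = []
    if not starts:
        return results
    with ThreadPoolExecutor(max_workers=1) as io:
        pending = io.submit(fetch_chunk, source, starts[0], chunk_size)
        for i in range(len(starts)):
            chunk = pending.result()  # wait for chunk i to arrive
            if i + 1 < len(starts):
                pending = io.submit(fetch_chunk, source, starts[i + 1], chunk_size)
            results.append(kernel(chunk))  # compute on chunk i
    return results


# Two unrelated "kernels" reuse the same scheduler without any IO code.
def count_over_50(chunk: List[int]) -> int:
    return sum(1 for x in chunk if x > 50)


data = list(range(100))
print(sum(run_decoupled(data, 16, count_over_50)))  # 49
print(max(run_decoupled(data, 16, max)))            # 99
```

The design point is that `count_over_50` and `max` contain no transfer or synchronization logic, so an existing kernel can be dropped in unchanged while the scheduler decides how and when data moves.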

Late Materialization: Smarter Data Access

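Vortex employs an optimization technique called late materialization to minimize data transfers. Traditional database engines often load entire columns into memory, even if a query needs only a fraction of their rows. Late materialization instead defers fetching a column until earlier operators, such as filters, have determined which rows are actually needed.

By using the GPU's zero-copy access to CPU memory, Vortex reads only those rows rather than copying whole columns. Tests showed this technique reduced data transfer time by up to 50% in certain queries.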
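
The Python sketch below contrasts the two strategies on a toy two-column table. It counts transferred values, not bytes, and the column names (`year`, `revenue`) and predicate are hypothetical. The gather step stands in for zero-copy reads; real zero-copy accesses move data in larger transactions, so very sparse gathers are not free in practice.

```python
from typing import Callable, Dict, List, Tuple

Columns = Dict[str, List[int]]


def early_materialization(cols: Columns, filter_col: str, pred: Callable[[int], bool],
                          out_col: str) -> Tuple[List[int], int]:
    # Copy both columns to the GPU in full, then filter.
    transferred = len(cols[filter_col]) + len(cols[out_col])
    result = [v for f, v in zip(cols[filter_col], cols[out_col]) if pred(f)]
    return result, transferred


def late_materialization(cols: Columns, filter_col: str, pred: Callable[[int], bool],
                         out_col: str) -> Tuple[List[int], int]:
    # Copy only the filter column and evaluate the predicate on it.
    transferred = len(cols[filter_col])
    row_ids = [i for i, f in enumerate(cols[filter_col]) if pred(f)]
    # Gather just the qualifying rows of the output column; this stands in
    # for fine-grained zero-copy reads from CPU memory.
    result = [cols[out_col][i] for i in row_ids]
    transferred += len(row_ids)
    return result, transferred


cols = {"year": [1995, 1996, 1997, 1998] * 25, "revenue": list(range(100))}
early = early_materialization(cols, "year", lambda y: y == 1997, "revenue")
late = late_materialization(cols, "year", lambda y: y == 1997, "revenue")
print(early[1], late[1])  # 200 125
```

Both strategies return the same rows; the saving grows as the filter becomes more selective.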

AI-Friendly Design: Harmony with Compute-Intensive Tasks

AI workloads such as LLM inference keep their model weights resident in GPU memory and spend most of their time on GPU computation and on-device memory traffic, so they make little use of their PCIe links to the CPU. Vortex takes advantage of this by borrowing the idle PCIe bandwidth of GPUs running these AI tasks.

During tests, running Vortex alongside Stable Diffusion 3 and Llama 3 inference slowed the AI tasks by less than 7%. This lets databases and AI models coexist on the same hardware, a crucial advantage for modern applications that mix AI-powered insights with real-time data analytics.
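
The article does not say how Vortex decides how much bandwidth to borrow from each GPU. One simple, hypothetical policy is to split the chunks of a transfer in proportion to each link's spare capacity, as sketched below in Python. The link capacities and the PCIe usage of the AI tasks are invented numbers, and `split_by_spare_bandwidth` is an illustrative name, not part of Vortex.

```python
def split_by_spare_bandwidth(num_chunks: int, capacity_gbs: list[float],
                             used_gbs: list[float]) -> list[int]:
    """Split chunks across links in proportion to each link's spare bandwidth.

    Leftover chunks after rounding down go to the links with the largest
    fractional remainders (largest-remainder method).
    """
    if len(capacity_gbs) != len(used_gbs):
        raise ValueError("capacity and usage lists must have the same length")
    spare = [max(c - u, 0.0) for c, u in zip(capacity_gbs, used_gbs)]
    total = sum(spare)
    if total == 0:
        raise ValueError("no spare PCIe bandwidth on any link")
    exact = [num_chunks * s / total for s in spare]
    shares = [int(x) for x in exact]  # round each share down
    leftover = num_chunks - sum(shares)
    by_remainder = sorted(range(len(exact)), key=lambda i: exact[i] - shares[i], reverse=True)
    for i in by_remainder[:leftover]:
        shares[i] += 1
    return shares


# Hypothetical: four 25 GB/s links; GPU 0 runs analytics, GPUs 1-3 run AI
# inference that already uses 2, 5, and 10 GB/s of their PCIe links.
print(split_by_spare_bandwidth(100, [25.0] * 4, [0.0, 2.0, 5.0, 10.0]))
# [30, 28, 24, 18]
```

Giving busier links fewer chunks keeps all links finishing at roughly the same time and limits interference with the AI tasks; an adaptive scheduler would re-measure usage as workloads change.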

Future Directions: What’s Next for Vortex?

The team plans to explore ways to scale Vortex for clusters with dozens of GPUs and investigate adaptive IO scheduling techniques for more dynamic workloads.

“We’ve only scratched the surface of what’s possible with shared GPU resources,” says Yuan. “The next step is optimizing across distributed systems and exploring newer memory technologies like CXL.”

Vortex’s source code is available on GitHub for researchers and developers eager to push the boundaries of GPU-accelerated analytics.